At Screenly, we have always been a security-first digital signage company. To double down on that commitment and streamline responsible disclosure for researchers, we officially launched Screenly’s Bug Bounty & Vulnerability Disclosure Program on September 25, 2025.

In this post, I’ll break down the program details and share some of the challenges we’ve encountered so far. I’d also like to acknowledge our CTO, Renat Galimov, for his contributions to this post and the program itself.

Why we launched a formal program

We take security seriously because digital signage is unique: it runs in public spaces, often sits on sensitive networks, and is managed at scale. Security isn’t a one-time box to check; it is a continuous operation.

Our goal with this program is to turn external feedback into a reliable engine for improvement and to properly reward researchers for their hard work.

How does Screenly’s Bug Bounty program work?

We have established clear “rules of engagement” covering test sites, third-party services, DDoS attacks, social engineering, and physical intrusion. Note that we do not accept reports on known issues that pose no tangible risk, or on purely cosmetic problems.

For full details on scope and reporting standards, please review our Bug Bounty & Vulnerability Disclosure Policy.

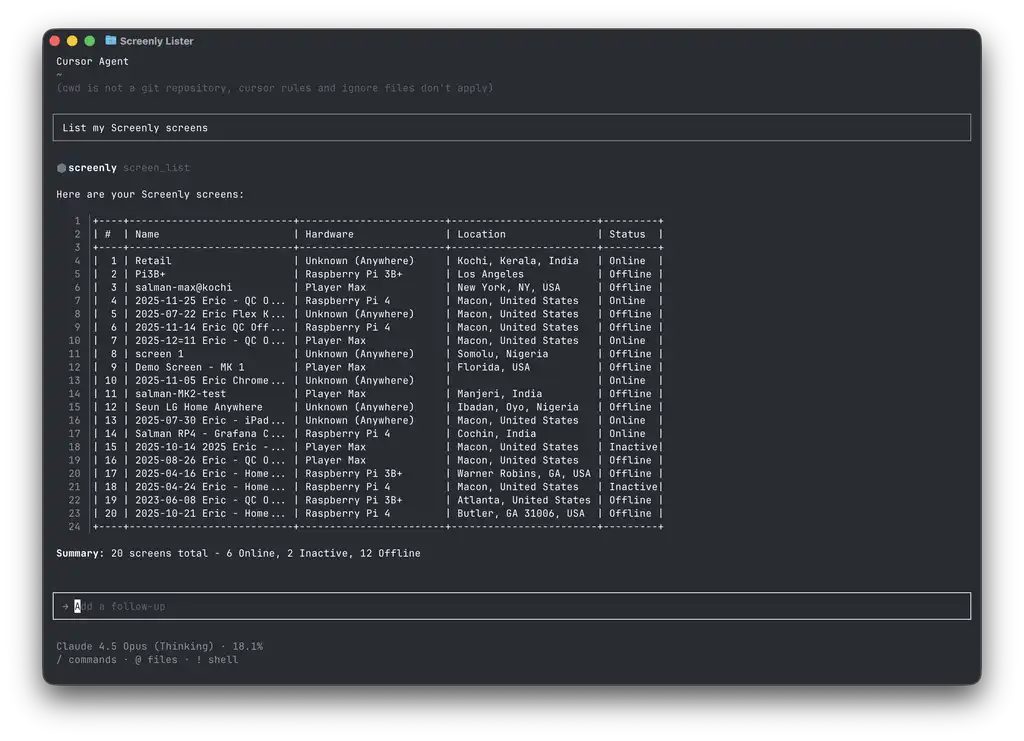

The AI challenge: Separating signal from noise

Shortly after launch, reports started rolling in. However, we immediately noticed a high volume of submissions that appeared to be AI-generated or AI-assisted. This resulted in an influx of low-quality, unproven reports that consumed reviewer time and distracted us from legitimate security findings.

This isn’t a problem unique to Screenly. The curl project recently announced the end of its bug bounty program due to a similar surge in low-quality submissions. In their announcement, they described “an explosion in AI slop reports,” noting that even reports that looked legitimate at a glance were often AI hallucinations.

While we can’t stop researchers from using AI tools, we are focusing on building better filters and refining our review process. It takes time, but keeping the signal-to-noise ratio high is vital for the program’s health.

What we changed after launch

To improve efficiency and response times, we implemented the following changes:

1. Clearer handling of mixed and chained reports

We noticed researchers combining unrelated issues into a single submission, sometimes mixing non-security bugs with potential vulnerabilities. To keep reviews clean and efficient, we now require one vulnerability per report. If you have related findings or chained exploits, please submit each one separately or clearly distinguish them in your report.

2. A better system for managing researcher communications

Running a bounty program requires as much communication as it does technical analysis. As volume increased, long email threads began to create bottlenecks.

We have since set up better processes to manage security inboxes, track report statuses, and deduplicate submissions. This allows our team to spend less time managing threads and more time verifying and fixing issues.
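To make the deduplication idea concrete, here is a minimal Python sketch. It is not our actual tooling: the `Report` fields, the normalization rules, and the helper names are all assumptions chosen for illustration. It fingerprints each report by a normalized title and affected URL, so trivially reworded resubmissions map to the same key.

```python
import hashlib
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Report:
    # Hypothetical fields; a real intake form would likely carry more.
    report_id: str
    title: str
    affected_url: str


def fingerprint(report: Report) -> str:
    """Hash a normalized title and URL so reworded duplicates collide."""
    # Lowercase the title and collapse punctuation/whitespace runs to one space.
    title = re.sub(r"[^a-z0-9]+", " ", report.title.lower()).strip()
    # Treat URLs that differ only by case or a trailing slash as the same.
    url = report.affected_url.lower().rstrip("/")
    return hashlib.sha256(f"{title}|{url}".encode("utf-8")).hexdigest()


def deduplicate(reports: list[Report]) -> tuple[list[Report], dict[str, str]]:
    """Return first-seen reports plus a map of duplicate id -> original id."""
    seen: dict[str, str] = {}
    unique: list[Report] = []
    duplicates: dict[str, str] = {}
    for report in reports:
        key = fingerprint(report)
        if key in seen:
            duplicates[report.report_id] = seen[key]
        else:
            seen[key] = report.report_id
            unique.append(report)
    return unique, duplicates
```

An exact-match fingerprint like this only catches near-verbatim resubmissions. Reports that describe the same issue in different words would still need a human reviewer or a fuzzier comparison.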

3. A more structured operating cadence

We want to set clear expectations, even during high-volume periods. We have established a regular schedule for triage and updates so that researchers know when to expect a response and our internal teams can stay focused on critical tasks.

4. Non-vulnerability reports

Like any software company, we have a backlog of low-priority bugs—issues that users rarely encounter and that carry no security risk. Historically, we prioritized new features over these minor fixes.

However, once the bounty program launched, these minor bugs began attracting an unhealthy amount of attention from researchers hoping for a payout. To reduce the noise, we decided to fix many of these minor issues simply to clear the board. That said, we want to remind researchers: we pay for security disclosures, not for QA on minor functional bugs.

It’s just one part of our broader security efforts

A disclosure program works best when it sits on top of strong security fundamentals. For Screenly, that means a secure platform, hardened system configurations, and transparent reporting channels.

Our main takeaway is that we want to reward actionable, high-value findings. We are working to discourage low-effort “spray and pray” submissions so we can resolve real issues faster.

Transparency is key. Recently, we received a report regarding a potential data leak. We conducted a thorough investigation and published our findings in a public blog post. This reflects our commitment to being open with both our customers and the research community.

A note to researchers

Thank you to everyone who helps keep Screenly secure by reporting issues responsibly.

If you find a vulnerability, please visit our Bug Bounty page for details on what is in scope and how to report it.